The Haptic Radar / Extended Skin Project

Summary

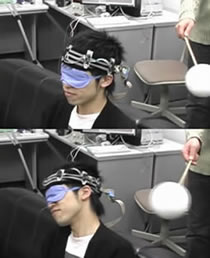

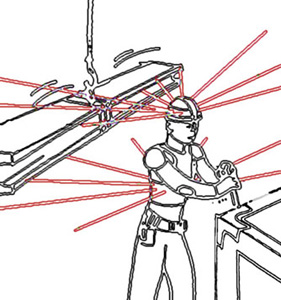

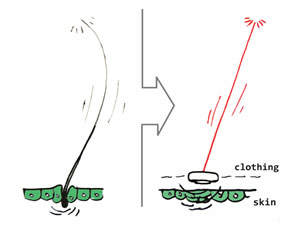

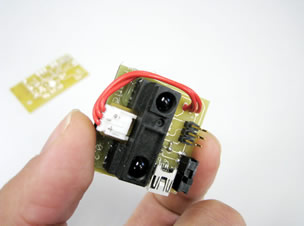

We are developing a wearable, modular device that allows users to perceive and respond to spatial information through haptic cues in an intuitive and unobtrusive way. The system is composed of an array of "optical-hair modules", each of which senses range information and transduces it into an appropriate vibro-tactile cue on the skin directly beneath it (the modules can be embedded in clothing or strapped to body parts, as shown in the figures). Analogues of our artificial sensory system in the animal world include cellular cilia, insect antennae, and the specialized sensory hairs of mammalian whiskers. In the future, this modular interface may cover precise skin regions or be distributed over the entire body surface, functioning as a double skin with enhanced and tunable sensing capabilities. We speculate that for a particular category of tasks (such as clear-path finding and collision avoidance), this type of sensory transduction may be more efficient than more classical vision-to-tactile substitution systems. Targeted applications of this interface include visual prosthetics for the blind, augmentation of spatial awareness in hazardous working environments, and enhanced obstacle awareness for car drivers (in this case the extended-skin sensors may cover the surface of the car).
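The range-to-vibration transduction performed by each module can be illustrated with a minimal sketch. It is not the project's actual firmware: the linear mapping, the function names, and the range limits `min_range_m` and `max_range_m` are all assumptions chosen for illustration.

```python
def range_to_intensity(distance_m: float,
                       min_range_m: float = 0.1,
                       max_range_m: float = 0.8) -> float:
    """Map a measured range to a vibration intensity in [0, 1].

    Assumption: intensity grows linearly as an obstacle gets closer.
    The range limits are hypothetical, not taken from the project.
    """
    if distance_m >= max_range_m:
        return 0.0  # nothing within sensing range: no vibration
    if distance_m <= min_range_m:
        return 1.0  # obstacle very close: full vibration
    return (max_range_m - distance_m) / (max_range_m - min_range_m)


def update_modules(distances_m: list[float]) -> list[float]:
    """Each module drives only its own actuator from its own range reading."""
    return [range_to_intensity(d) for d in distances_m]


if __name__ == "__main__":
    # Three hypothetical modules: far, mid-range, and very close obstacles.
    print(update_modules([1.2, 0.45, 0.05]))
```

Because every module maps its own reading to its own actuator, no central processing is needed in this sketch, which reflects the modular design described above.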


In short, what we are proposing is to build artificial, wearable, light-based hairs (or antennae; see figures). The actual hair stem will be an invisible, steerable laser beam. In the near future, we may be able to create on-chip, skin-implantable whiskers using MOEMS technology. Results in a similar direction have already been achieved in the framework of the smart laser scanner project in our lab. Our first prototype (in the shape of a haptic headband) uses off-the-shelf components (an Arduino microcontroller and Sharp IR rangefinders) and provides the wearer with 360 degrees of spatial awareness. It received very positive reviews in our proof-of-principle experiments, including a test with fifty blind people (results not yet published).
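As a rough illustration of how a ring of modules can cover 360 degrees, the sketch below picks the module whose sector contains an obstacle's bearing. The even spacing, the module count of six, and the function name are hypothetical; the text does not state how many rangefinders the headband carries or how they are arranged.

```python
def module_for_bearing(bearing_deg: float, num_modules: int = 6) -> int:
    """Return the index of the module whose sector contains the bearing.

    Assumptions: modules are evenly spaced around the head, module 0 is
    centred on 0 degrees (straight ahead), and indices increase with
    increasing bearing. num_modules=6 is a placeholder value.
    """
    sector = 360.0 / num_modules
    # Shift by half a sector so each module is centred on its own direction.
    shifted = (bearing_deg % 360.0) + sector / 2
    return int(shifted // sector) % num_modules


if __name__ == "__main__":
    for bearing in (0, 45, 180, 359):
        print(bearing, "->", module_for_bearing(bearing))
```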


Movies

- Prototype proof-of-principle collision-avoidance experiment (April 2006)

- Computer-controlled headband simulator (virtual maze)

- Independent module demo

References

Papers:

- A. Cassinelli, E. Sampaio, S.B. Joffily, H.R.S. Lima and B.P.G.R. Gusmão, Do blind people move more confidently with the Tactile Radar? IOS Press, Technology and Disability, Vol. 26, No. 2-3, pp. 161-170 (2014) [PDF-775KB]

- Cassinelli Alvaro, Reynolds Carson and Ishikawa Masatoshi: Augmenting spatial awareness with Haptic Radar, Tenth International Symposium on Wearable Computers (ISWC) (Montreux, 2006.10.11-14), short paper (4 pages) [PDF-103KB]. Slide presentation [PPT-6.4MB]. Unpublished long version (6 pages) [PDF-268KB]

- Cassinelli Alvaro, Reynolds Carson and Ishikawa Masatoshi: Haptic Radar, The 33rd International Conference and Exhibition on Computer Graphics and Interactive Techniques (SIGGRAPH) (Boston, 2006.8.1) [PDF-202KB,

- More detailed project web page: http://ishikawa-vision.org/perception/HapticRadar/HapticRadar_LongPage.html

- "Haptic Radar" featured on NTV Japan Television ("Sekaiichi Uketai Jugyou", Japan Television, 2008.06.07)

- "Laser Scanner Reads Air Writing", article on Discovery Channel.com (Sept. 1, 2005)

- Daily Planet (Discovery Channel Canada), about "Haptic Radar"

- ITV1 British Television, with Joanna Lumley as "Catwoman", aired on Sunday 6th and Sunday 13th September 2009.

- Exhibited at Ars Electronica - Tokyo University Campus Exhibition: HYBRID EGO (2008).

- Exhibited at new Ars Electronica Center (AEC, Linz) as part of the permanent exhibition New Views of Humankind/Main Gallery/Robolab (2009-2011).