ChAff

Summary

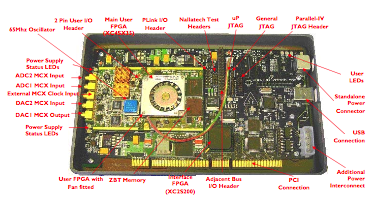

Imagine a robot clumsily interrupts a meeting, and the speaker responds by angrily chastising the robot for its behavior. Further imagine that the robot is able to react by apologizing and changing its behavior. To realize this scenario, the goal of the ChAff project is to design an FPGA-based system that classifies speech in real time according to prosodic information.

The approach is to build upon existing prosody-related features. By simulating real-time speech analysis, we identify algorithms that are practical to run in real time. Following simulation, register-transfer-level (RTL) representations of the prosody classification algorithms are synthesized and run on an FPGA.

Currently, the system computes real-time estimates of speaking rate (syllables per second), pitch (fundamental frequency), and loudness (in dB). Future work centers on classifying the resulting trajectories in rate-pitch-loudness space.
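As an illustration of what per-frame pitch and loudness estimates can look like in a software simulation, here is a minimal Python sketch. It is not the project's implementation: the autocorrelation pitch estimator, the function names `loudness_db` and `pitch_hz`, the 75 to 400 Hz search range, and the full-scale reference of 1.0 are all assumptions chosen for the example. Speaking rate is left out of the sketch.

```python
import math


def loudness_db(frame, ref=1.0):
    """RMS level of one frame in dB relative to `ref`.

    `ref=1.0` assumes samples are normalized so that full scale is 1.0.
    """
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    # Clamp to avoid log10(0) on silent frames.
    return 20.0 * math.log10(max(rms, 1e-12) / ref)


def pitch_hz(frame, sample_rate, fmin=75.0, fmax=400.0):
    """Estimate fundamental frequency from the autocorrelation peak.

    Only lags corresponding to the assumed range fmin..fmax are searched.
    The autocorrelation is left unnormalized, which slightly favors
    shorter lags and so discourages picking a multiple of the true period.
    Returns None when no positive peak is found (e.g. silence).
    """
    lag_min = int(sample_rate / fmax)
    lag_max = min(int(sample_rate / fmin), len(frame) - 1)
    best_lag, best_score = 0, 0.0
    for lag in range(lag_min, lag_max + 1):
        score = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag if best_lag else None


# Example: a 50 ms frame of a synthetic 200 Hz tone sampled at 8 kHz.
sample_rate = 8000
tone = [0.5 * math.sin(2 * math.pi * 200 * i / sample_rate) for i in range(400)]
print(pitch_hz(tone, sample_rate), loudness_db(tone))
```

Calling both functions on successive short frames of an audio stream yields a pitch and loudness trajectory over time. A hardware version would likely replace the floating-point arithmetic with fixed-point operations, but that design choice is outside what this sketch shows.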

Reference

- Reynolds, C., Ishikawa, M. and Tsujino, H. (2006) Realizing Affect in Speech Classification in Real-Time. Aurally Informed Performance: Integrating Machine Listening and Auditory Presentation in Robotic Systems, in conjunction with AAAI Fall Symposia, October 13-15, 2006, Washington, D.C., USA.