High-Speed Real-time Range Finding by Projecting Structured Light Field

Summary

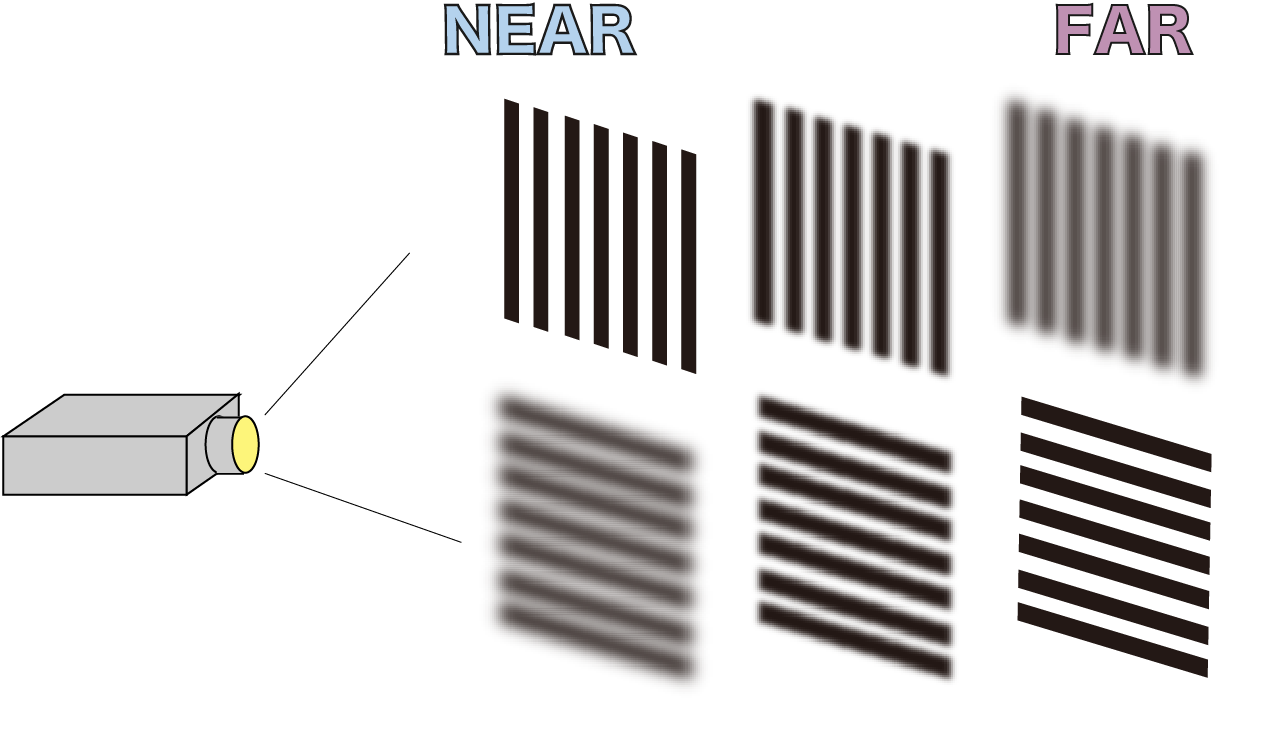

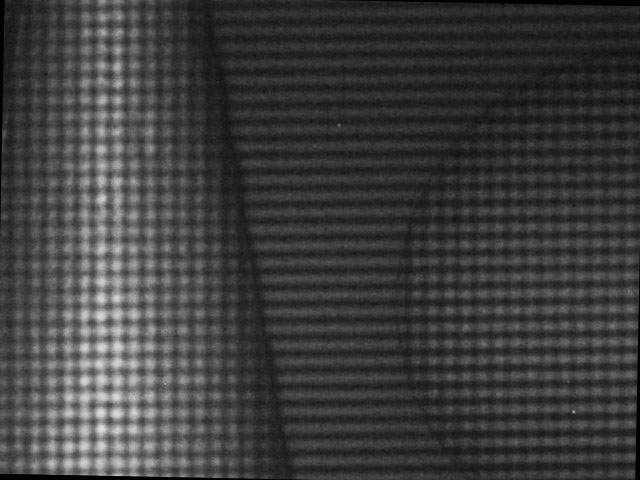

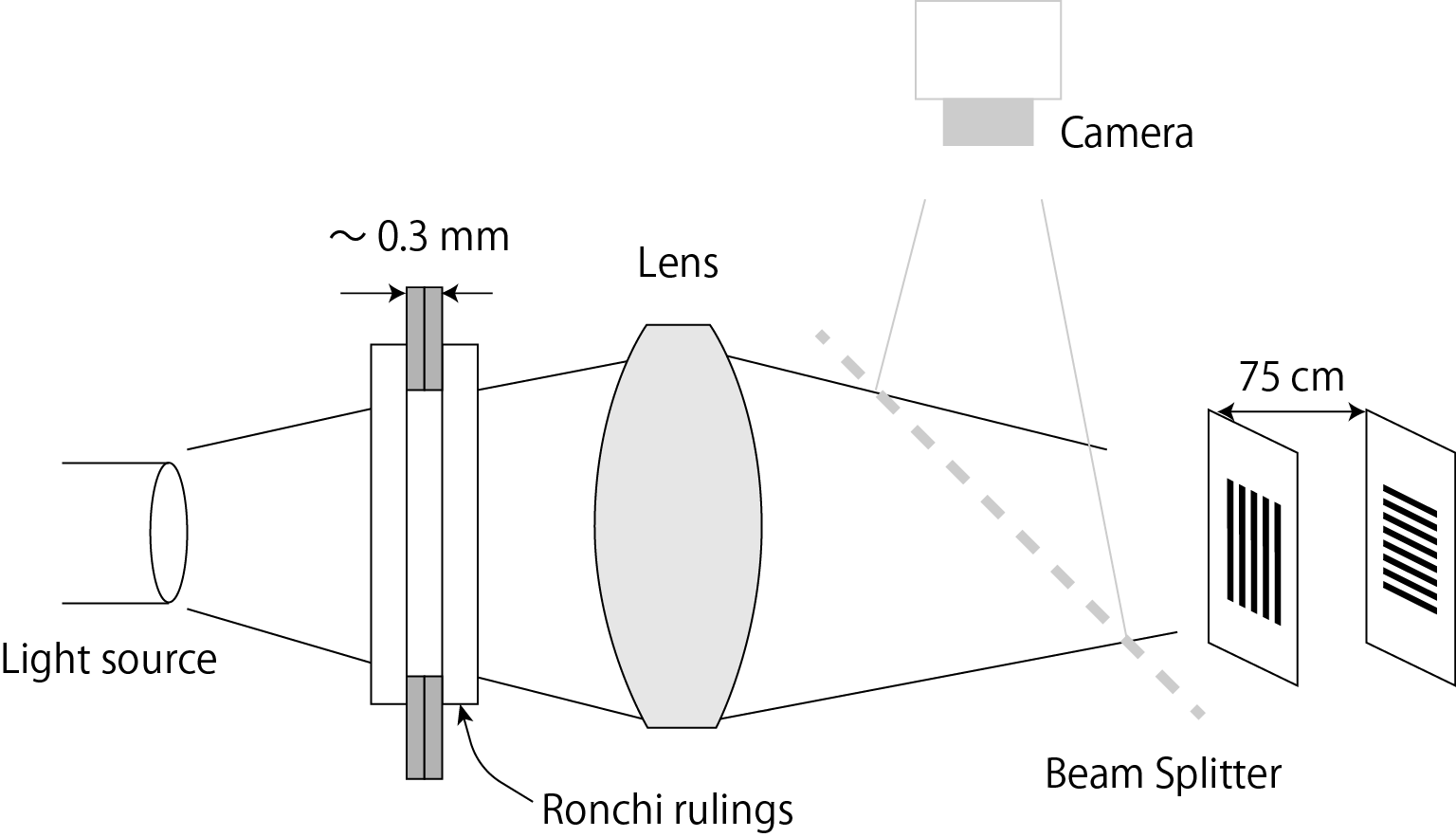

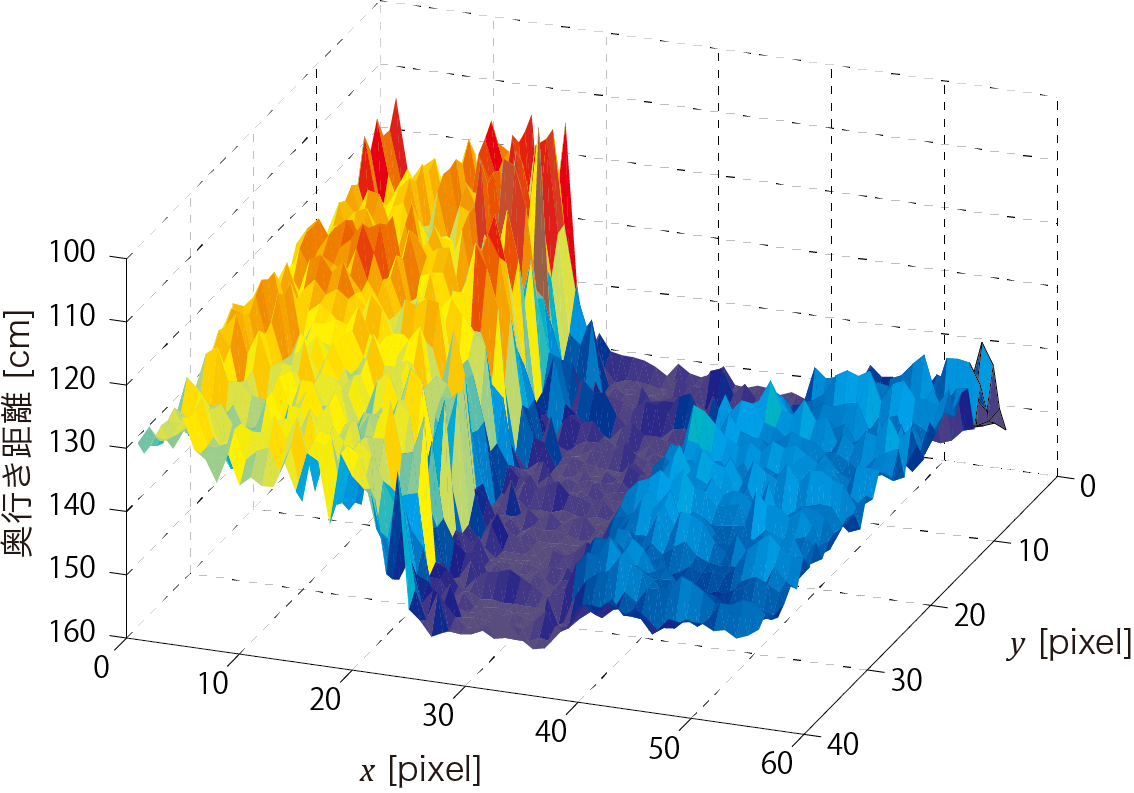

Real-time depth estimation is used in many applications, such as motion capture and human-computer interfaces. However, conventional depth estimation methods, including stereo matching and Depth from Defocus, compute depth from the captured images by optimization or pattern matching, which makes them difficult to adapt to high-speed sensing. In this work, we propose a high-speed, real-time depth estimation method using structured light whose appearance varies with the projection distance. Vertical and horizontal stripe patterns are projected, with the vertical pattern focused on the near plane and the horizontal pattern focused on the far plane. The distance from the projector is then calculated from the ratio of the amount of defocus blur (bokeh) between the two stripe patterns observed on the object surface. Because this calculation is straightforward and requires no optimization, the method achieves high-speed depth estimation without parallel-processing hardware such as FPGAs or GPUs.
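The per-point calculation can be illustrated with a minimal sketch. It makes several assumptions that the summary does not state. First, the stripes are aligned with the image axes and the surface is locally flat, so a captured patch is approximately a function of x (vertical stripes) plus a function of y (horizontal stripes). Second, the mean absolute x- and y-differences serve as an inverse proxy for the blur of each pattern. Third, the resulting ratio is mapped to depth through a hypothetical calibration table measured with a flat target at known distances. The function names and calibration values are illustrative only and are not taken from the prototype.

```python
import numpy as np


def stripe_sharpness(patch: np.ndarray) -> tuple[float, float]:
    """Gradient-energy sharpness of the vertical and horizontal stripe components.

    Assumption: vertical stripes vary only along x, so they appear only in
    x-differences; horizontal stripes vary only along y, so they appear only in
    y-differences. Defocus blur lowers stripe contrast and hence these values.
    """
    p = patch.astype(np.float64)
    s_vertical = float(np.mean(np.abs(np.diff(p, axis=1))))    # differences along x
    s_horizontal = float(np.mean(np.abs(np.diff(p, axis=0))))  # differences along y
    return s_vertical, s_horizontal


def blur_ratio(patch: np.ndarray, eps: float = 1e-9) -> float:
    """Normalized ratio in [0, 1]: close to 1 when the near-focused (vertical)
    pattern is sharper, close to 0 when the far-focused (horizontal) one is."""
    s_v, s_h = stripe_sharpness(patch)
    return s_v / (s_v + s_h + eps)


def ratio_to_depth(r, calib_ratio, calib_depth):
    """Map ratio(s) to depth by linear interpolation in a hypothetical
    calibration table (ratio measured at known target distances)."""
    calib_ratio = np.asarray(calib_ratio, dtype=np.float64)
    calib_depth = np.asarray(calib_depth, dtype=np.float64)
    order = np.argsort(calib_ratio)  # np.interp requires increasing x values
    return np.interp(r, calib_ratio[order], calib_depth[order])


# Synthetic patch: sinusoidal stripes whose amplitudes stand in for the blur.
T, N = 8, 32
y, x = np.mgrid[0:N, 0:N]
patch = 0.5 + 0.3 * np.sin(2 * np.pi * x / T) + 0.1 * np.sin(2 * np.pi * y / T)

r = blur_ratio(patch)
depth = ratio_to_depth(r, calib_ratio=[0.8, 0.5, 0.2], calib_depth=[500, 1000, 1500])
print(f"ratio={r:.3f}, depth={depth:.1f} mm")  # ratio=0.750, depth=583.3 mm
```

Each sampling point needs only a few differences, one division, and one table lookup, with no iterative search. This is consistent with the summary's claim that no optimization is required. The prototype's actual blur measure and pattern-separation scheme may differ from this sketch.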

A prototype system computes a depth map of 2,500 sampling points in 0.2 ms on an off-the-shelf personal computer. Although its depth resolution and accuracy are not on par with those of conventional methods, the computed depth information is sufficient to separate the foreground from the background. To verify the speed and accuracy, we incorporated the method into a high-speed gaze controller called the Saccade Mirror, which captures an image every 2 ms and shifts the camera direction according to the position of the target object. Conventional depth estimation methods cannot be used with the Saccade Mirror because of their computational cost. The proposed method, on the other hand, detects moving objects by separating the foreground from the background, and successfully tracks objects that have the same color as the background.
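Processing 2,500 points in 0.2 ms corresponds to about 80 ns per point. The sketch below vectorizes a per-point ratio over a grid of sampling patches and thresholds the resulting depth to obtain a foreground mask. It rests on several assumptions that are not from the summary: the 2,500 points form a 50 × 50 grid of equal-sized patches, blur is measured by a gradient-energy proxy (mean absolute x-differences for the vertical stripes and y-differences for the horizontal stripes), and depth comes from a hypothetical calibration table. The names `depth_map` and `threshold_mm` are illustrative. The sketch makes no claim about the prototype's actual timing or implementation.

```python
import numpy as np


def depth_map(image, calib_ratio, calib_depth, grid=50, eps=1e-9):
    """Depth at grid x grid sampling patches (hypothetical 50 x 50 layout)."""
    img = np.asarray(image, dtype=np.float64)
    ph, pw = img.shape[0] // grid, img.shape[1] // grid
    # blocks[i, :, j, :] is the patch for sampling point (i, j)
    blocks = img[: ph * grid, : pw * grid].reshape(grid, ph, grid, pw)
    # Differences are taken inside each patch only (axes 1 and 3).
    s_v = np.abs(np.diff(blocks, axis=3)).mean(axis=(1, 3))  # vertical stripes: along x
    s_h = np.abs(np.diff(blocks, axis=1)).mean(axis=(1, 3))  # horizontal stripes: along y
    r = s_v / (s_v + s_h + eps)
    calib_ratio = np.asarray(calib_ratio, dtype=np.float64)
    calib_depth = np.asarray(calib_depth, dtype=np.float64)
    order = np.argsort(calib_ratio)  # np.interp requires increasing x values
    return np.interp(r, calib_ratio[order], calib_depth[order])


# Synthetic 500 x 500 frame: a near square object in front of a far background.
H = W = 500
T = 10
yy, xx = np.mgrid[0:H, 0:W]
near = np.zeros((H, W), dtype=bool)
near[200:300, 200:300] = True
a_v = np.where(near, 0.3, 0.1)  # near-focused vertical stripes are sharp on the object
a_h = np.where(near, 0.1, 0.3)  # far-focused horizontal stripes are sharp on the background
frame = 0.5 + a_v * np.sin(2 * np.pi * xx / T) + a_h * np.sin(2 * np.pi * yy / T)

depth = depth_map(frame, calib_ratio=[0.8, 0.5, 0.2], calib_depth=[500, 1000, 1500])
threshold_mm = 1000.0           # hypothetical foreground/background split
fg = depth < threshold_mm       # True at sampling points judged to be foreground
print(depth.shape, int(fg.sum()))
```

The segmentation uses depth rather than color, so in this synthetic frame the object is separated even though its mean intensity equals the background's. This mirrors, in a simplified form, how depth-based separation can track an object with the same color as the background.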
