FTIR-based calibration of Airborne Ultrasound Tactile Display and camera system

Summary

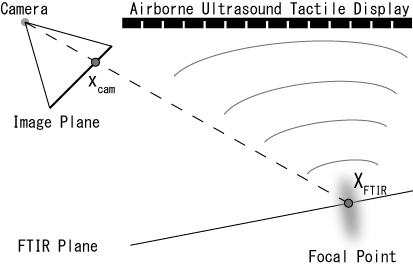

We propose a system that can present visual and tactile information on moving surfaces such as hands and sheets of paper without delay. The system consists of two subsystems. The first provides high-speed tracking and projection with optical axis control. The second, the "Airborne Ultrasound Tactile Display (AUTD)", can remotely project tactile feedback onto the user's hands. The AUTD was developed by the Shinoda Laboratory (Department of Complexity Science and Engineering, Graduate School of Frontier Sciences, and Department of Information Physics and Computing), the University of Tokyo.

The system provides high-speed, unconstrained augmented reality using visual and tactile feedback. To eliminate mismatches between the feedback modalities, a calibration method that aligns the components is needed. In particular, the focal point of the AUTD, where acoustic radiation pressure generated at an arbitrary position in the air produces a tactile sensation, is invisible to ordinary cameras. It is therefore difficult to apply conventional calibration methods, which observe the displayed information directly with cameras in the way projector-camera systems are calibrated.

For simple, high-precision calibration between the AUTD and cameras, we propose a method that visualizes the acoustic radiation pressure at the focal point by exploiting a phenomenon called Frustrated Total Internal Reflection (FTIR).

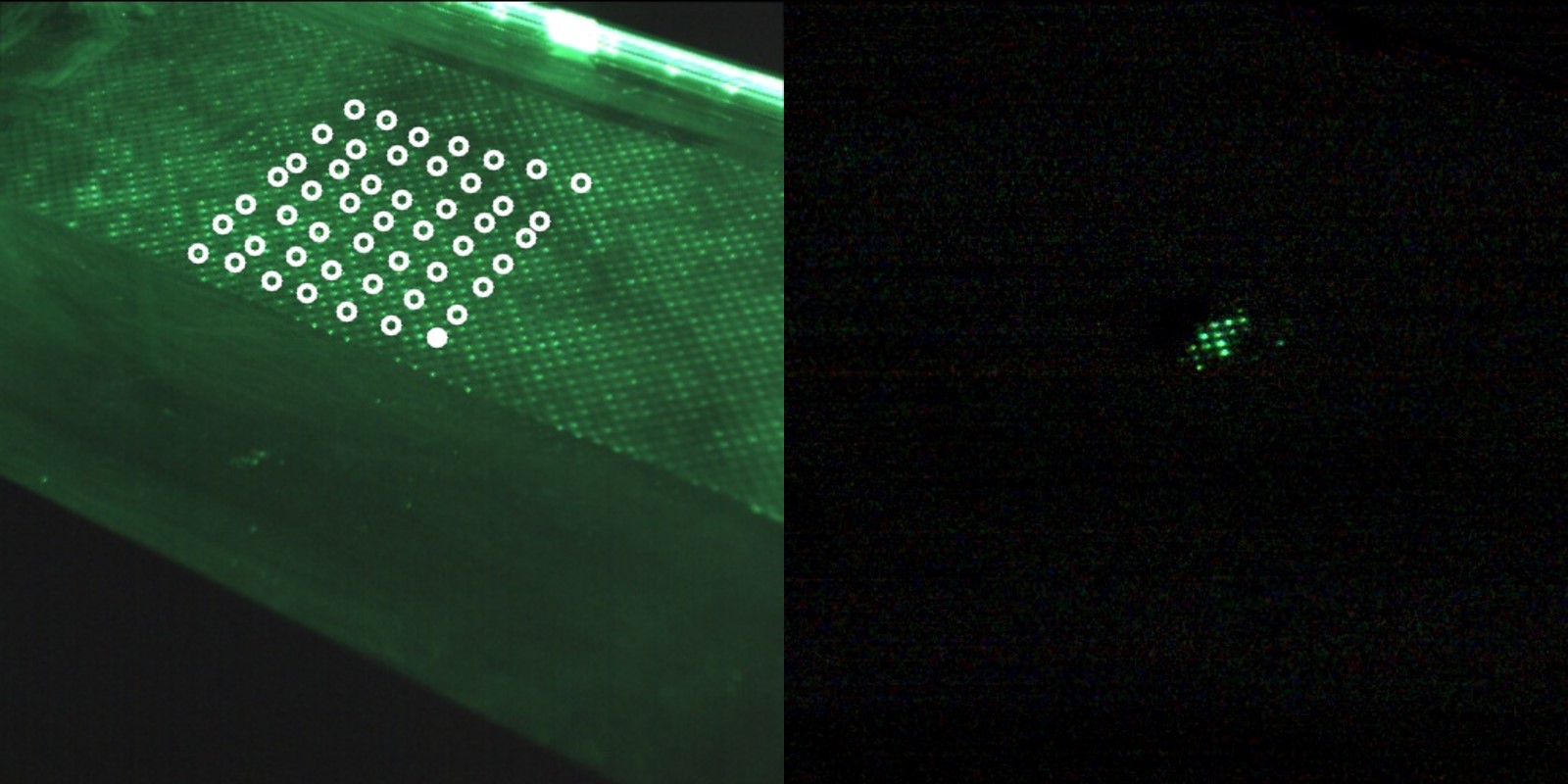

When pressure is applied to a membrane covering an acrylic sheet that is illuminated from the side, light leaks out of the sheet at the pressed position. There, the contact area between the acrylic surface and the membrane increases, which frustrates the total internal reflection that otherwise keeps the light inside the sheet.
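The optical principle can be checked with Snell's law: light stays trapped in the sheet only while it strikes the surface at more than the critical angle θc = arcsin(n_out / n_sheet), measured from the surface normal. Where the membrane touches the acrylic, the outside medium changes from air to the membrane material, θc grows, and rays that were trapped can escape. The sketch below assumes n ≈ 1.49 for acrylic and a hypothetical membrane index of 1.41 (typical of silicone rubber); the membrane actually used is not specified here.

```python
import math

N_ACRYLIC = 1.49   # approximate refractive index of acrylic (PMMA) for visible light
N_AIR = 1.00
N_MEMBRANE = 1.41  # hypothetical membrane index, for illustration only


def critical_angle_deg(n_sheet: float, n_out: float) -> float:
    """Angle of incidence (from the normal) above which light is totally
    internally reflected at the sheet/outside interface."""
    if n_out >= n_sheet:
        raise ValueError("total internal reflection requires n_sheet > n_out")
    return math.degrees(math.asin(n_out / n_sheet))


def is_trapped(theta_deg: float, n_sheet: float, n_out: float) -> bool:
    """True if a ray hitting the interface at theta_deg stays inside the sheet."""
    return theta_deg > critical_angle_deg(n_sheet, n_out)


if __name__ == "__main__":
    print(f"{critical_angle_deg(N_ACRYLIC, N_AIR):.1f}")       # → 42.2 (acrylic/air)
    print(f"{critical_angle_deg(N_ACRYLIC, N_MEMBRANE):.1f}")  # → 71.1 (acrylic/membrane)
    # A ray at 60 degrees is trapped against air but escapes where the membrane touches.
    print(is_trapped(60.0, N_ACRYLIC, N_AIR), is_trapped(60.0, N_ACRYLIC, N_MEMBRANE))
```

This ignores the evanescent coupling that already begins when the gap is only a fraction of a wavelength, so it is a qualitative picture of why the pressed spot lights up rather than a model of spot brightness.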

This light spot can be observed by a camera and indicates the focal position. By displaying suitable patterns of such focal points, the AUTD can be calibrated against the cameras.
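Locating the light spot in a camera frame can be sketched as a thresholded, intensity-weighted centroid. The sketch assumes a grayscale frame stored as nested lists and subtracts a reference frame captured with the focal point switched off (an assumption, meant to suppress ambient light and any constant glow from the sheet). The function names and the threshold are hypothetical, not taken from the original system.

```python
from typing import List, Optional, Tuple

Image = List[List[float]]  # grayscale image indexed as img[y][x]


def difference_image(on: Image, off: Image) -> Image:
    """Frame with the focal point on minus a reference frame with it off,
    clipped at zero so only newly brightened pixels remain."""
    return [[max(a - b, 0.0) for a, b in zip(r_on, r_off)]
            for r_on, r_off in zip(on, off)]


def spot_centroid(img: Image, threshold: float) -> Optional[Tuple[float, float]]:
    """Intensity-weighted centroid (x, y) of pixels above threshold, or None
    if no pixel exceeds it (e.g. the focal point missed the sheet)."""
    sum_w = sum_x = sum_y = 0.0
    for y, row in enumerate(img):
        for x, v in enumerate(row):
            if v > threshold:
                w = v - threshold  # weight by brightness above the threshold
                sum_w += w
                sum_x += w * x
                sum_y += w * y
    if sum_w == 0.0:
        return None
    return (sum_x / sum_w, sum_y / sum_w)


if __name__ == "__main__":
    off = [[2.0] * 5 for _ in range(5)]  # uniform background frame
    on = [row[:] for row in off]
    on[2][2], on[2][3], on[2][4] = 7.0, 12.0, 7.0  # spot centred at x=3, y=2
    print(spot_centroid(difference_image(on, off), threshold=1.0))  # → (3.0, 2.0)
```

Weighting by intensity gives sub-pixel spot positions, which matters when the spot spans several pixels; a plain brightest-pixel search would quantize the result to whole pixels.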

In contrast to methods in which, e.g., a human hand or a single microphone is used to identify the AUTD focal point subjectively, the FTIR-based method enables a structured two-dimensional search for the focal point in the camera image. This reduces manual workload and helps build an environment in which multimodal information can be presented flexibly and with high precision.
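The structured search can be sketched under assumptions not stated in the original: the FTIR sheet is planar, the AUTD focal points are commanded on a grid in the sheet plane (in millimetres, in a hypothetical AUTD frame), and each detected spot centroid gives the matching camera pixel. Under a pinhole camera, a plane maps to the image by a homography, so the sketch fits one by least squares (DLT with h33 fixed to 1). This is an illustrative technique, not necessarily the calibration model of the referenced paper, and all names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve_linear(a: Matrix, b: List[float]) -> List[float]:
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: focal-point pattern is degenerate")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_homography(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """Least-squares homography H (h33 = 1) mapping sheet-plane points src
    to image points dst. Needs >= 4 correspondences, no three collinear."""
    if len(src) != len(dst) or len(src) < 4:
        raise ValueError("need at least 4 matched points")
    rows: List[List[float]] = []
    rhs: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h6 x + h7 y + 1) = h0 x + h1 y + h2, and likewise for v
        rows.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        rhs.append(v)
    # Normal equations (A^T A) h = A^T b
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(8)] for i in range(8)]
    atb = [sum(r[i] * bi for r, bi in zip(rows, rhs)) for i in range(8)]
    h = solve_linear(ata, atb)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_homography(h: Matrix, p: Point) -> Point:
    """Map a sheet-plane point to image coordinates."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


if __name__ == "__main__":
    # Hypothetical ground-truth mapping, used only to simulate detected spots.
    h_true = [[10.0, 1.0, 320.0], [0.5, 9.0, 240.0], [0.001, 0.002, 1.0]]
    # 3 x 3 grid of commanded focal points, 20 mm spacing (hypothetical).
    grid = [(x, y) for x in (-20.0, 0.0, 20.0) for y in (-20.0, 0.0, 20.0)]
    spots = [apply_homography(h_true, p) for p in grid]  # stand-in for spot centroids
    h = fit_homography(grid, spots)
    print(apply_homography(h, (5.0, 7.0)), apply_homography(h_true, (5.0, 7.0)))
```

With real, noisy centroids, using more grid points than the minimum of four and normalizing both point sets before fitting (as in Hartley's normalized DLT) makes the estimate better conditioned than these raw normal equations.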


Reference

- Tomohiro Sueishi, Keisuke Hasegawa, Kohei Okumura, Hiromasa Oku, Hiroyuki Shinoda and Masatoshi Ishikawa : High-speed Dynamic Information Environment with Airborne Ultrasound Tactile Display-Camera System and The Calibration Method, Transactions of the Virtual Reality Society of Japan, Vol.19, No. 2, pp.173-183 (2014) (Japanese)