High-Resolution Surface Reconstruction based on Multi-level Implicit Surface from Multiple Range Images

Summary

Sensing the 3D shape of a dynamic scene is a nontrivial problem, but it is useful for various applications. Recent sensing systems have improved and are now capable of high sampling rates. For dynamic scenes in particular, however, there is a limit to how far resolution can be improved at high sampling rates. In this paper, we present a method for improving the resolution of a 3D shape reconstructed from multiple range images acquired from a moving target. In our approach, the alignment and surface estimation problems are solved within a single simultaneous estimation framework. Combined with an adaptive multi-level implicit surface as the shape representation, this lets us compute the alignment from shape features and estimate the surface according to how far the point cloud of each range image has moved. As a result, the approach achieves more precise estimation than a scheme that merely alternates between estimating shape and alignment. We present experimental results that evaluate the reconstruction accuracy for different point cloud densities and noise levels.
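The idea of solving alignment and surface estimation together can be illustrated with a deliberately small 2D sketch. It is not the method of the paper: the multi-level implicit surface is replaced by the signed distance to a single circle, the alignment is translation only, and all names (`simulate_frames`, `residuals`, `fit_jointly`) are hypothetical. Each frame samples the shape sparsely at different positions, and one Gauss-Newton problem updates the surface parameters and all frame shifts in the same step.

```python
import numpy as np

def simulate_frames(n_frames=30, n_points=12, noise=0.02, seed=0):
    """Sparse, noisy scans of one circle; each frame is shifted by an unknown offset."""
    rng = np.random.default_rng(seed)
    center, radius = np.array([0.5, -0.2]), 1.0
    shifts = rng.uniform(-0.3, 0.3, size=(n_frames, 2))
    shifts[0] = 0.0  # frame 0 defines the reference coordinate system
    frames = []
    for t in shifts:
        # A random phase makes every frame sample different surface positions.
        theta = rng.uniform(0, 2 * np.pi) + np.linspace(0, 2 * np.pi, n_points, endpoint=False)
        pts = center + radius * np.column_stack([np.cos(theta), np.sin(theta)])
        frames.append(pts + t + rng.normal(scale=noise, size=pts.shape))
    return frames, shifts, radius

def residuals(params, frames):
    """Implicit-function value f(x) = |x - c| - r at every point, after undoing each frame's shift."""
    center, r = params[:2], params[2]
    shifts = np.vstack([[0.0, 0.0], params[3:].reshape(-1, 2)])
    res = []
    for pts, t in zip(frames, shifts):
        d = pts - t - center
        res.append(np.hypot(d[:, 0], d[:, 1]) - r)
    return np.concatenate(res)

def jacobian(params, frames, eps=1e-6):
    """Forward-difference Jacobian of the residual vector (assumed adequate for this toy)."""
    base = residuals(params, frames)
    J = np.empty((base.size, params.size))
    for j in range(params.size):
        step = params.copy()
        step[j] += eps
        J[:, j] = (residuals(step, frames) - base) / eps
    return J

def fit_jointly(frames, iters=20):
    """Gauss-Newton over surface parameters (center, radius) and all frame shifts at once."""
    c0 = frames[0].mean(axis=0)
    r0 = np.linalg.norm(frames[0] - c0, axis=1).mean()
    t0 = np.array([f.mean(axis=0) - c0 for f in frames[1:]]).ravel()
    params = np.concatenate([c0, [r0], t0])
    for _ in range(iters):
        J = jacobian(params, frames)
        delta, *_ = np.linalg.lstsq(J, -residuals(params, frames), rcond=None)
        params = params + delta
    return params

if __name__ == "__main__":
    frames, true_shifts, true_r = simulate_frames()
    params = fit_jointly(frames)
    print("estimated radius:", params[2], "true radius:", true_r)
```

Because the radius is shared by all frames, every frame's points constrain the surface while the surface in turn constrains every frame's shift. An alternating scheme would instead freeze one set of unknowns while updating the other.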

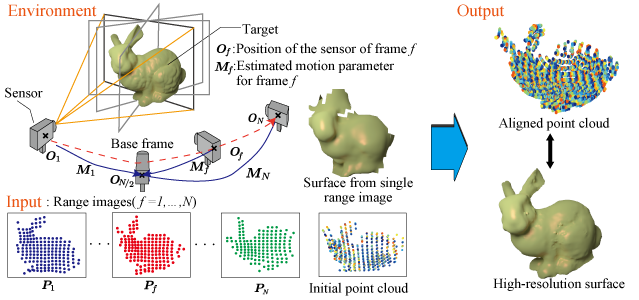

Fig. 1 Concept of high-resolution surface reconstruction, the measurement environment, and the reconstructed surface of the Stanford bunny.

Fig. 2 Result of an experiment using real data.

(Left: Original surface. Center: Surface reconstructed using a single frame. Right: Surface reconstructed by the proposed method using 30 frames.)

Related Research

Reference

- Shohei Noguchi, Yoshihiro Watanabe, Masatoshi Ishikawa: High-resolution Surface Reconstruction based on Multi-level Implicit Surface from Multiple Range Images, IPSJ Transactions on Computer Vision and Applications, Vol. 5, pp. 143-152, August 2013.

- Shohei Noguchi, Yoshihiro Watanabe, Masatoshi Ishikawa: High-Resolution Surface Reconstruction based on Multi-level Implicit Surface from Multiple Range Images, IEEE International Conference on Image Processing (ICIP2013) (Melbourne, Sep 16, 2013) / Proceedings, pp. 2140-2144.