
Main artists: Alvaro Cassinelli & Daito Manabe / Contributors: Yusaku Kuribara, Alexis Zerroug & Luc Foubert

Check the slide presentation [PDF, 11 MB].

Demo videos


Publications:


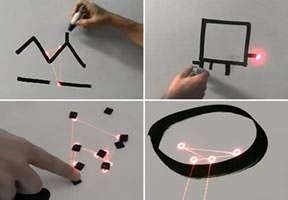

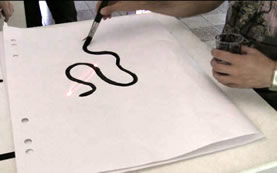

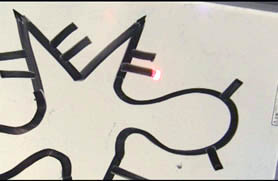

A laser-based artificial synesthesia instrument

"scoreLight" is a prototype musical instrument capable of generating sound in real time from the lines of doodles as well as from the contours of three-dimensional objects nearby (hands, a dancer's silhouette, architectural details, etc.). There is neither a camera nor a projector: a laser spot explores the shape as a pick-up head would search for sound over the surface of a vinyl record - with the significant difference that the groove is generated by the contours of the drawing itself. The light beam follows these contours in the very same way a blind person uses a white cane to stick to a guidance route on the street. Details of this tracking technique can be found here. Sound is produced and modulated according to the curvature of the lines being followed, their angle with respect to the vertical, and their color and contrast. This means that "scoreLight" implements gesture, shape and color-to-sound artificial synesthesia [4]. Abrupt changes in the direction of the lines trigger discrete sounds (percussion, glitches), thus creating a rhythmic base (the length of a closed path determines the overall tempo).
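The mapping from shape to sound described above can be illustrated with a minimal sketch. It is not the installation's actual code: it assumes the followed contour is available as a closed polyline of `(x, y)` points, that the laser spot moves at a constant speed, and that a "corner" is any turn sharper than a hypothetical threshold of 60 degrees. The names `contour_features`, `loop_period` and `corner_threshold` are illustrative only.

```python
import math

def contour_features(points, corner_threshold=math.radians(60)):
    """Per-vertex features of a closed polyline given as (x, y) tuples.

    Assumption: the contour has already been sampled into vertices;
    the real system tracks the line continuously with a laser spot.
    """
    n = len(points)
    features = []
    for i in range(n):
        x0, y0 = points[i - 1]          # previous vertex (wraps around)
        x1, y1 = points[i]              # current vertex
        x2, y2 = points[(i + 1) % n]    # next vertex (wraps around)
        heading_in = math.atan2(y1 - y0, x1 - x0)
        heading_out = math.atan2(y2 - y1, x2 - x1)
        # Signed turning angle, wrapped to [-pi, pi): a discrete stand-in
        # for the curvature of the line at this vertex.
        turn = (heading_out - heading_in + math.pi) % (2 * math.pi) - math.pi
        # Angle between the outgoing direction and the vertical (y) axis.
        angle_from_vertical = abs(math.atan2(x2 - x1, y2 - y1))
        features.append({
            "turn": turn,
            "angle_from_vertical": angle_from_vertical,
            # Abrupt direction changes would fire a discrete sound.
            "trigger": abs(turn) >= corner_threshold,
        })
    return features

def loop_period(points, spot_speed):
    """Time for one lap around the closed path at constant spot speed."""
    n = len(points)
    perimeter = sum(math.dist(points[i], points[(i + 1) % n]) for i in range(n))
    return perimeter / spot_speed

square = [(0, 0), (1, 0), (1, 1), (0, 1)]
corners = contour_features(square)
period = loop_period(square, spot_speed=2.0)
```

In this sketch every vertex of the square counts as a corner, so one lap would produce four evenly spaced triggers, and a longer path at the same spot speed would give a slower loop. How the real instrument maps curvature, angle, color and contrast to specific sound parameters is not specified here.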

Artist Statement

A previous installation (called "Sticky Light") called into question the role of light as a passive substance used for contemplating a painting. Illumination is not a passive ingredient of the observation process: the quality of the light, its relative position and angle fundamentally affect the nature of the perceived image. "Sticky Light" exaggerated this by making light a living element of the painting. "scoreLight" introduces another sensorial modality. It not only transforms graphical patterns into temporal rhythms, but also makes audible more subtle elements such as the smoothness or roughness of the artist's stroke, the texture of the line, etc. This installation is an artistic approach to artificial sensory substitution research and artificial synesthesia, very much along the lines of Golan Levin's works in the field [3]. In particular, it can be seen as the reverse (in a procedural sense) of the interaction scheme of Golan Levin & Zach Lieberman's "Messa di Voce" [2], in which the speed and direction of a curve continuously being drawn on a screen is controlled by the pitch and volume of the sound (usually voice) captured by a microphone nearby.


Finally, it is interesting to note that the purity of the laser light and the fluidity of the motion make for a unique interactive experience that cannot be reproduced by the classic camera/projector setup. It is also possible to project the light over buildings tens or hundreds of meters away - and then "read aloud" the city landscape.

Technical Statement

The piece is based upon a 3D tracking technology called the "smart laser scanner", developed at the Ishikawa-Komuro laboratory in 2003, which uses a laser diode, a pair of steering mirrors, and a single non-imaging photodetector (for details, see here). The hardware is unusual: since there is neither a camera nor a projector (with pixelated sensors or light sources), tracking as well as motion can be extremely smooth and fluid.


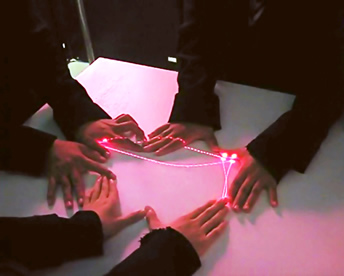

Installation setup

The setup can be easily configured for interaction on a horizontal surface, as was done for the Sticky Light project, or on a vertical surface (a wall or a large white board for people to create graffiti or draw doodles). When using the system on a table, the laser power is less than half a milliwatt - half the power of a not very powerful laser pointer. More powerful, multicolored laser sources can be used in order to "augment" (visually and with sound) facades of buildings tens of meters away. Sound is also spatialized (see quadrophonic setup below); panning is controlled by the relative position of the tracking spots, their speed and acceleration.
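As an illustration of position-driven panning over four speakers, here is a minimal sketch under stated assumptions. It uses a standard equal-power panning law, which is a swapped-in textbook technique and not necessarily the one used in the installation. It assumes the spot position is normalized to the unit square, with one speaker at each corner and `y = 0` arbitrarily labeled "front". It ignores the speed and acceleration of the spot, which the real system also takes into account. The function name `quad_gains` is hypothetical.

```python
import math

def quad_gains(x, y):
    """Equal-power gains for four corner speakers.

    Assumptions: x and y are the spot position normalized to [0, 1];
    x = 0 is the left edge and y = 0 the (arbitrarily named) front edge.
    """
    # Clamp so a spot slightly outside the surface still yields valid gains.
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)
    # Sine/cosine crossfade on each axis keeps the total power constant.
    left, right = math.cos(x * math.pi / 2), math.sin(x * math.pi / 2)
    front, back = math.cos(y * math.pi / 2), math.sin(y * math.pi / 2)
    return {
        "front_left": left * front,
        "front_right": right * front,
        "back_left": left * back,
        "back_right": right * back,
    }

center = quad_gains(0.5, 0.5)
corner = quad_gains(0.0, 0.0)
```

With this law the squared gains always sum to one, so the perceived loudness stays roughly constant as the spot travels across the surface; a spot in the middle feeds all four speakers equally, and a spot in a corner feeds only the nearest one.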


Modes of interaction and sound generation (work in progress)

The preferred mode of operation is contour following. Each connected component of the image functions as a sound sequencer. Sequences can be recorded and reused in the form of drawings (on stickers, for instance). The sound is modulated in the following ways:

Other modes of operation include:

Although it is interesting to "hear" any kind of drawing, more control can be gained by "recording" interesting patterns and reusing them. Recording here is graphical: stickers, for instance, can be used as tracks or effects (very much like in the reacTable [10] interface). These can be arranged relative to each other, their relative distance mutually affecting their sounds as explained above (for instance, the "track" represented as a drawing on a sticker could be used to modulate the volume of the sound produced by another sticker).


It is still too early to decide whether this system can be effectively used as a musical instrument or not (is it expressive enough? can we find the right balance between control and randomness?). However, it is interesting to note that "scoreLight", in its present form, already unveils an unexpected direction of (artistic?) research: the user does not really know if he/she is painting or composing music. Indeed, the interrelation and (real-time) feedback between sound and visuals is so strong that one is tempted to coin a new term for the performance, since it is neither drawing nor playing (music), but both things at the same time... drawplaying?

Exhibition history


References:


Credit details:

Main artists:

Acknowledgments:


Contact

Alvaro Cassinelli, Assistant Professor, Department of Information Physics and Computing.

The University of Tokyo. 7-3-1 Hongo, Bunkyo-ku, Tokyo 113-0033 Japan.
